Asset-Centric Orchestration: Focus on "What," Not "How"

Why data engineering is stuck in a binary deadlock

I've built data platforms for years, and one thing is clear: modern "no-code" ingestion tools promise the moon but often leave you hanging. In my view, data engineering is stuck in a binary deadlock. Organizations must choose between the Managed Service Tax (convenience at the cost of opaque billing) and the Operational Tax (flexibility at the cost of human capital).

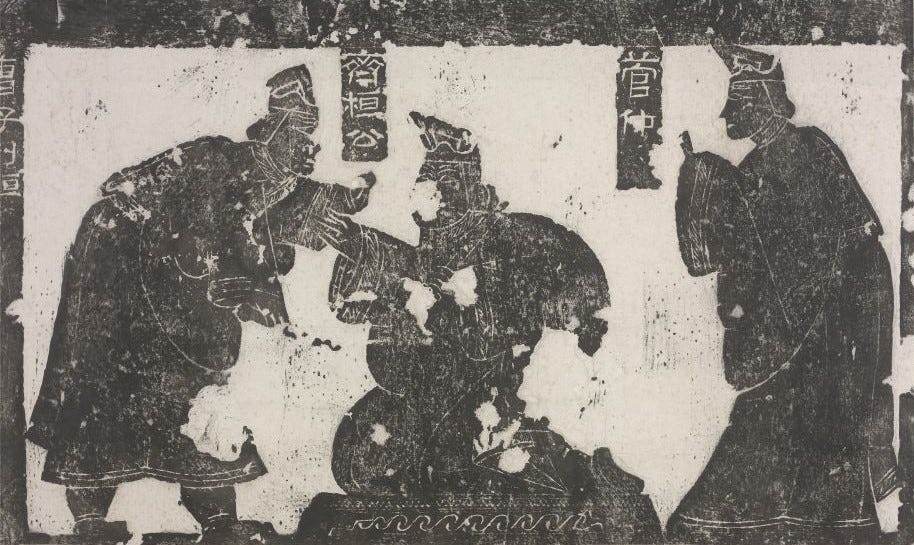

Roughly 2,700 years ago, the Chinese statesman Guan Zhong faced a familiar dilemma: tax people directly and risk revolt, or lower taxes and starve the state. His solution was neither. He eliminated most direct taxes entirely and moved revenue into infrastructure (salt, iron, trade), making taxation predictable, indirect, and almost invisible.

Modern data ingestion is stuck in the same deadlock. Managed services impose a hidden tax through opaque pricing and resync shocks. Code-first pipelines impose an operational tax through human toil. As an engineering leader who has lived through late-night firefighting and “data swamps,” I see the era of fragmented “best-of-breed” tooling coming to an end. Integrated platforms are replacing it, offering streamlined workflows at the cost of growing vendor lock-in and financial unpredictability. I believe the industry should take a third path: shifting cost upstream into structure, specs, and contracts, so that ingestion stops taxing people at all.

The Binary Deadlock: Fivetran, dbt, and the “Fusion” Shift

The merger of Fivetran and dbt Labs, announced in October 2025, creates a single vendor that spans ingestion and transformation. It signals a shift toward integrated stacks in which these two layers are unified. However, this consolidation brings risks for data teams:

The Licensing Divergence: While dbt Core remains Apache 2.0, the "future vision" for transformation lies in dbt Fusion. Written in Rust, Fusion is licensed under the Elastic License v2 (ELv2)—a "source-available" license that prohibits using it to provide managed services to others.

The Innovation Tax: As dbt Core enters "maintenance mode," innovation is reserved for proprietary engines. This pushes organizations into a "dangerously dependent" relationship with a single vendor.

GUI-First vs. Specification-First: The Hidden Operational Costs

Airbyte and Fivetran shine with slick UIs, but that magic comes at the cost of control. Engineers can end up spending 30% to 40% of their time “firefighting,” meaning fixing broken pipelines and resolving sync failures. When that happens, the platform's long-term health goes into terminal decline.

Ingestion Debt: According to the 2024 Global Data Engineering Report, 64% of data leaders report that technical debt significantly limits their ability to achieve business goals.

The “Fragility Loop”: Under pressure to deliver, engineers adopt brittle scripts that create a “data death cycle.” In this cycle, every minor change requires manual oversight, until maintenance consumes 60% to 80% of the team’s capacity.

“Open-Source” Connectors: The Limits and a Solution

Meltano and the Singer ecosystem offer a code-first illusion, but the reality is a maintenance nightmare of outdated taps. Patching a single open-source connector ad hoc every time it breaks only entrenches that brittleness. Instead, I advocate for spec-driven data pipelines, which declare the expected input and the desired output. Connectors then serve purely as technical, replaceable providers:

Precision over Prompting: Spec-Driven Development uses formal, machine-readable specifications as the single source of truth for both input and output. The goal of this structured approach is 95% or higher accuracy when implementing specs on the first attempt.

Spec-Driven Workflow: Moving intellectual effort “upstream” to the Specify and Plan phases lets teams capture the “why” behind technical choices. This prevents “intent-vs-implementation drift.” The technical details of connectors and data movers still matter, but they are secondary: any provider can be replaced if the need arises.
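Here is a minimal Python sketch of that separation, under stated assumptions. A spec declares the expected input columns and the desired output columns, and a connector is simply any callable that yields rows. `PipelineSpec`, `run`, and both toy connectors are hypothetical names for illustration only. They are not dativo-ingest's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, Tuple

Row = Dict[str, object]
# Hypothetical connector interface: any provider that yields raw rows.
Connector = Callable[[], Iterable[Row]]


@dataclass(frozen=True)
class PipelineSpec:
    """Single source of truth for what comes in and what must come out."""
    name: str
    input_columns: Dict[str, type]   # expected input columns and their Python types
    output_columns: Tuple[str, ...]  # desired output columns, in order


def run(spec: PipelineSpec, connector: Connector) -> list:
    """Validate each row against the declared input, then project the declared output."""
    out = []
    for row in connector():
        for col, typ in spec.input_columns.items():
            if not isinstance(row.get(col), typ):
                raise ValueError(f"{spec.name}: column {col!r} is not {typ.__name__}")
        out.append({col: row[col] for col in spec.output_columns})
    return out


# Two interchangeable toy providers (hypothetical stand-ins for real connectors).
def file_connector() -> Iterable[Row]:
    yield {"id": 1, "email": "a@example.com", "plan": "pro"}
    yield {"id": 2, "email": "b@example.com", "plan": "free"}


def api_connector() -> Iterable[Row]:
    yield {"id": 1, "email": "a@example.com", "plan": "pro", "extra": "ignored"}


spec = PipelineSpec(
    name="customers",
    input_columns={"id": int, "email": str, "plan": str},
    output_columns=("id", "plan"),
)
```

Swapping `file_connector` for `api_connector` changes only where rows come from. The output shape stays pinned by the spec, which is what keeps the provider replaceable.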

Data Contracts: Beyond “Bring First-Decide Later”

The traditional approach of ingesting raw data and transforming it later tends to produce data swamps, and poor data quality is expensive. Gartner estimates that it costs organizations an average of $12.9 million a year.

Fail-Closed Validation: We declare machine-readable YAML contracts at the source. If a source system change violates the contract, the deployment is rejected—a “fail-closed” gate that prevents downstream pollution.
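A fail-closed gate can be as small as one function. It compares the schema a source proposes against the contract and refuses the deployment on any violation. It also refuses when the contract itself cannot be read. The sketch assumes the YAML contract has already been parsed into a dict, for example with PyYAML's `yaml.safe_load`. The contract layout and the `ContractViolation`/`deploy_allowed` names are hypothetical.

```python
class ContractViolation(Exception):
    """Raised when a proposed source schema breaks the data contract."""


def check_contract(contract: dict, proposed: dict) -> None:
    """Compare a source's proposed schema (column name -> type) with the contract."""
    errors = []
    for column in contract["columns"]:
        name, expected = column["name"], column["type"]
        if name not in proposed:
            errors.append(f"missing column {name!r}")
        elif proposed[name] != expected:
            errors.append(f"{name!r} changed type: {expected} -> {proposed[name]}")
    # In this sketch, new (additive) columns are tolerated;
    # removed columns and type changes are treated as breaking.
    if errors:
        raise ContractViolation("; ".join(errors))


def deploy_allowed(contract: dict, proposed: dict) -> bool:
    """Fail closed: a violation *or* an unreadable contract blocks the deploy."""
    try:
        check_contract(contract, proposed)
    except Exception:
        return False
    return True


# Hypothetical contract, as it might look after loading the YAML file.
orders_contract = {
    "dataset": "orders",
    "columns": [
        {"name": "order_id", "type": "bigint"},
        {"name": "amount", "type": "decimal(12,2)"},
    ],
}
```

The broad `except Exception` is deliberate. In a fail-closed gate, an error in the check itself must block the deployment rather than let it through.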

Schema-as-Code: By versioning every asset's schema in Git and running strict validation at ingestion time, we treat data movement with the same rigor as application code.
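As a rough sketch, ingestion-time strictness can mean three things. Rows with unknown columns, missing required values, or wrong types reject the whole batch. Every accepted batch also records a fingerprint of the schema that validated it, so the data can be traced back to a specific committed version. The schema layout, the type mapping, and the function names below are assumptions for illustration.

```python
import hashlib
import json

# Hypothetical versioned schema, as it might be stored in Git.
SCHEMA_V2 = {
    "version": 2,
    "columns": {"order_id": "int", "amount": "float", "currency": "str"},
    "required": ["order_id", "amount"],
}
PY_TYPES = {"int": int, "float": float, "str": str}


def fingerprint(schema: dict) -> str:
    """Stable hash of the schema content, independent of key order."""
    canonical = json.dumps(schema, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def validate_row(schema: dict, row: dict) -> list:
    """Return a list of violations for one row (empty list means valid)."""
    errors = []
    unknown = set(row) - set(schema["columns"])
    if unknown:
        errors.append(f"unknown columns: {sorted(unknown)}")
    for col in schema["required"]:
        if row.get(col) is None:
            errors.append(f"required column {col!r} is null or missing")
    for col, type_name in schema["columns"].items():
        value = row.get(col)
        if value is not None and not isinstance(value, PY_TYPES[type_name]):
            errors.append(f"{col!r} expected {type_name}, got {type(value).__name__}")
    return errors


def ingest(schema: dict, rows: list) -> dict:
    """Reject the whole batch on any violation; otherwise report what validated it."""
    bad = {}
    for i, row in enumerate(rows):
        errs = validate_row(schema, row)
        if errs:
            bad[i] = errs
    if bad:
        raise ValueError(f"batch rejected by schema v{schema['version']}: {bad}")
    return {"schema_version": schema["version"], "schema_hash": fingerprint(schema), "rows": len(rows)}
```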

Asset-Centric Orchestration: Focus on “What,” Not “How”

Traditional orchestrators like Airflow are task-centric, focusing on workflow execution. An asset-centric approach instead keeps attention on what really matters: “What” we expect the data pipeline to produce.

Native Lineage: In an asset-centric model, the focus is on the data products being produced. Each asset declares its upstream dependencies, so lineage is captured automatically in a unified graph. This makes it easier to trace every asset's origin and transformations.
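Orchestrators such as Dagster build this idea into their APIs. The plain-Python sketch below does not use any orchestrator. It only shows why lineage comes "for free" once each asset declares what it depends on: the dependency map is the lineage graph. The `asset` decorator, the registries, and the asset names are all hypothetical.

```python
from typing import Callable, Dict, Optional, Set, Tuple

ASSETS: Dict[str, Callable] = {}
DEPS: Dict[str, Tuple[str, ...]] = {}


def asset(*deps: str):
    """Register a function as the producer of the asset named after it."""
    def register(fn: Callable) -> Callable:
        ASSETS[fn.__name__] = fn
        DEPS[fn.__name__] = deps
        return fn
    return register


@asset()
def raw_orders():
    return [{"order_id": 1, "amount": 20.0}, {"order_id": 2, "amount": 5.0}]


@asset("raw_orders")
def clean_orders(raw_orders):
    return [o for o in raw_orders if o["amount"] > 10]


@asset("clean_orders")
def revenue(clean_orders):
    return sum(o["amount"] for o in clean_orders)


def upstream(name: str) -> Set[str]:
    """Lineage: walk the declared dependencies transitively."""
    seen: Set[str] = set()
    stack = list(DEPS[name])
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(DEPS[dep])
    return seen


def materialize(name: str, cache: Optional[dict] = None):
    """Build an asset by first building everything it depends on."""
    cache = {} if cache is None else cache
    if name not in cache:
        inputs = [materialize(dep, cache) for dep in DEPS[name]]
        cache[name] = ASSETS[name](*inputs)
    return cache[name]
```

With this in place, `upstream("revenue")` answers "where did this number come from?" without any separate lineage tooling.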

One Job per Asset: Enforcing a one-asset-per-job design pattern simplifies retry logic. If one of fifty tables fails, we re-run only that specific job rather than retrying a massive, entangled pipeline.
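The retry benefit is easy to see in a small sketch. Each asset runs as its own job, failures are isolated, and each retry round re-runs only the assets that failed. The `run_jobs` function and the simulated flaky table are hypothetical. A real orchestrator would also add backoff, alerting, and persistence of run state.

```python
from typing import Callable, Dict


def run_jobs(jobs: Dict[str, Callable[[], None]], max_attempts: int = 3) -> Dict[str, int]:
    """Run one independent job per asset and retry only the ones that failed.

    Returns how many attempts each asset needed; raises if any asset
    still fails after max_attempts rounds.
    """
    attempts = {name: 0 for name in jobs}
    pending = list(jobs)
    for _ in range(max_attempts):
        failed = []
        for name in pending:
            attempts[name] += 1
            try:
                jobs[name]()
            except Exception:
                failed.append(name)  # isolate the failure; other assets are unaffected
        if not failed:
            return attempts
        pending = failed  # the next round re-runs only the failed assets
    raise RuntimeError(f"assets still failing after {max_attempts} attempts: {pending}")


# Fifty table jobs, one of which hits a transient error on its first run.
calls = {"orders_7": 0}


def flaky_orders_7() -> None:
    calls["orders_7"] += 1
    if calls["orders_7"] == 1:
        raise ConnectionError("transient source timeout")


jobs = {f"orders_{i}": (lambda: None) for i in range(50)}
jobs["orders_7"] = flaky_orders_7
attempts = run_jobs(jobs)
```

Here `orders_7` needs two attempts, and the other 49 tables run exactly once.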

The “Third Way”

After slogging through these limitations, I built dativo-ingest to test whether the binary deadlock can be broken.

Headless & Config-Driven: No UI needed; pipelines are defined in YAML under GitOps, making deployments auditable.
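Because pipelines live as files in Git, the review step can be automated. Below is a minimal sketch of a CI check that runs on a pull request and fails it when any pipeline config lacks a schema or the required tags. It assumes the YAML files have already been parsed into dicts. The key names (`source`, `target`, `schema`, `tags`, `owner`, `cost_center`) and the connector values are hypothetical. They are not dativo-ingest's actual configuration format.

```python
from typing import Dict, List

REQUIRED_KEYS = ("source", "target", "schema", "tags")
REQUIRED_TAGS = ("owner", "cost_center")


def lint_pipeline(name: str, config: dict) -> List[str]:
    """List everything that would make this pipeline config undeployable."""
    problems = [f"{name}: missing {key!r}" for key in REQUIRED_KEYS if key not in config]
    tags = config.get("tags") or {}
    problems += [f"{name}: missing tag {tag!r}" for tag in REQUIRED_TAGS if not tags.get(tag)]
    return problems


def ci_gate(configs: Dict[str, dict]) -> int:
    """Print all problems and return a process exit code (non-zero fails the PR)."""
    problems = [p for name in sorted(configs) for p in lint_pipeline(name, configs[name])]
    for problem in problems:
        print(problem)
    return 1 if problems else 0


# Hypothetical parsed configs, one per YAML file in the repository.
configs = {
    "orders": {
        "source": {"connector": "postgres", "table": "public.orders"},
        "target": {"table": "sales.orders"},
        "schema": "schemas/orders.v2.yaml",
        "tags": {"owner": "data-platform", "cost_center": "cc-123"},
    },
    "leads": {
        "source": {"connector": "hubspot", "object": "contacts"},
        "target": {"table": "marketing.leads"},
        "tags": {"owner": "growth"},
    },
}
```

Since the check reads only files, every accepted or rejected deployment is tied to a commit and a review. That is where the auditability comes from.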

Lakehouse-Native: It writes Apache Iceberg tables directly and updates their metadata via Nessie, ensuring ACID semantics and propagating FinOps tags directly into table properties.
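Iceberg table properties are free-form string key/value pairs, which makes them a natural home for cost-attribution tags. The sketch below turns a pipeline's tags into namespaced properties and merges them with a table's existing properties. Engine settings such as `write.format.default` are left alone, and stale tags are dropped from the desired state. The `finops.` prefix and the required tag set are assumptions. The resulting dict would then be applied through whatever catalog client commits the table, for example a PyIceberg transaction's `set_properties`.

```python
from typing import Dict

REQUIRED_FINOPS_TAGS = {"cost_center", "owner", "environment"}  # hypothetical policy


def finops_table_properties(tags: Dict[str, str], prefix: str = "finops.") -> Dict[str, str]:
    """Map pipeline tags to namespaced table properties, refusing incomplete tag sets."""
    missing = REQUIRED_FINOPS_TAGS - set(tags)
    if missing:
        raise ValueError(f"missing FinOps tags: {sorted(missing)}")
    return {f"{prefix}{key}": str(value) for key, value in sorted(tags.items())}


def merge_properties(existing: Dict[str, str], tags: Dict[str, str], prefix: str = "finops.") -> Dict[str, str]:
    """Desired table properties: keep non-FinOps keys, replace all FinOps keys."""
    kept = {k: v for k, v in existing.items() if not k.startswith(prefix)}
    return {**kept, **finops_table_properties(tags, prefix)}
```

Replacing the whole `finops.` namespace means the table always reflects the tags currently in the config, not the ones it was created with.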

Metadata-Driven Production Readiness: I specifically designed it for high-scale environments like Databricks Lakeflow, generating production-ready code automatically from formal specs.
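Generating code from a formal spec can be illustrated with a small renderer that emits Spark SQL DDL for an Iceberg table. Identifiers and types are validated first so that the spec cannot inject arbitrary SQL. This is a hedged sketch with a hypothetical spec layout. It is not necessarily how dativo-ingest or Databricks Lakeflow generates its code.

```python
import re

IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")
SQL_TYPE = re.compile(r"^[a-z]+(\(\d+(,\d+)?\))?$")  # e.g. bigint, string, decimal(12,2)


def render_ddl(spec: dict) -> str:
    """Render a CREATE TABLE statement from a table spec (hypothetical layout)."""
    names = [spec["namespace"], spec["table"], *(c["name"] for c in spec["columns"])]
    for name in names:
        if not IDENT.match(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    for col in spec["columns"]:
        if not SQL_TYPE.match(col["type"]):
            raise ValueError(f"unsupported type: {col['type']!r}")
    properties = spec.get("properties", {})
    for key, value in properties.items():
        if "'" in key or "'" in str(value):
            raise ValueError(f"unsafe property: {key!r}")

    cols = ",\n".join(
        f"  {c['name']} {c['type']}{'' if c.get('nullable', True) else ' NOT NULL'}"
        for c in spec["columns"]
    )
    ddl = f"CREATE TABLE IF NOT EXISTS {spec['namespace']}.{spec['table']} (\n{cols}\n) USING iceberg"
    if properties:
        props = ", ".join(f"'{k}' = '{v}'" for k, v in sorted(properties.items()))
        ddl += f"\nTBLPROPERTIES ({props})"
    return ddl


orders_spec = {
    "namespace": "sales",
    "table": "orders",
    "columns": [
        {"name": "order_id", "type": "bigint", "nullable": False},
        {"name": "amount", "type": "decimal(12,2)"},
    ],
    "properties": {"finops.owner": "data-platform"},
}
```

The same spec could drive several generators (DDL, validation, tests), so they cannot drift apart from each other.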

I will keep posting about my findings, but it bears repeating: Dativo Ingest isn’t just about connectors, which are easily replaced in the YAML configuration. What is non-negotiable are the constraints, such as upfront schemas and tags, because they force the discipline required to escape the data death cycle and reclaim technical agency.